As enterprises scale their AI agent deployments from proof-of-concept to production, they face a fundamental challenge: how to maintain granular control over agent behavior without sacrificing development velocity or operational flexibility.

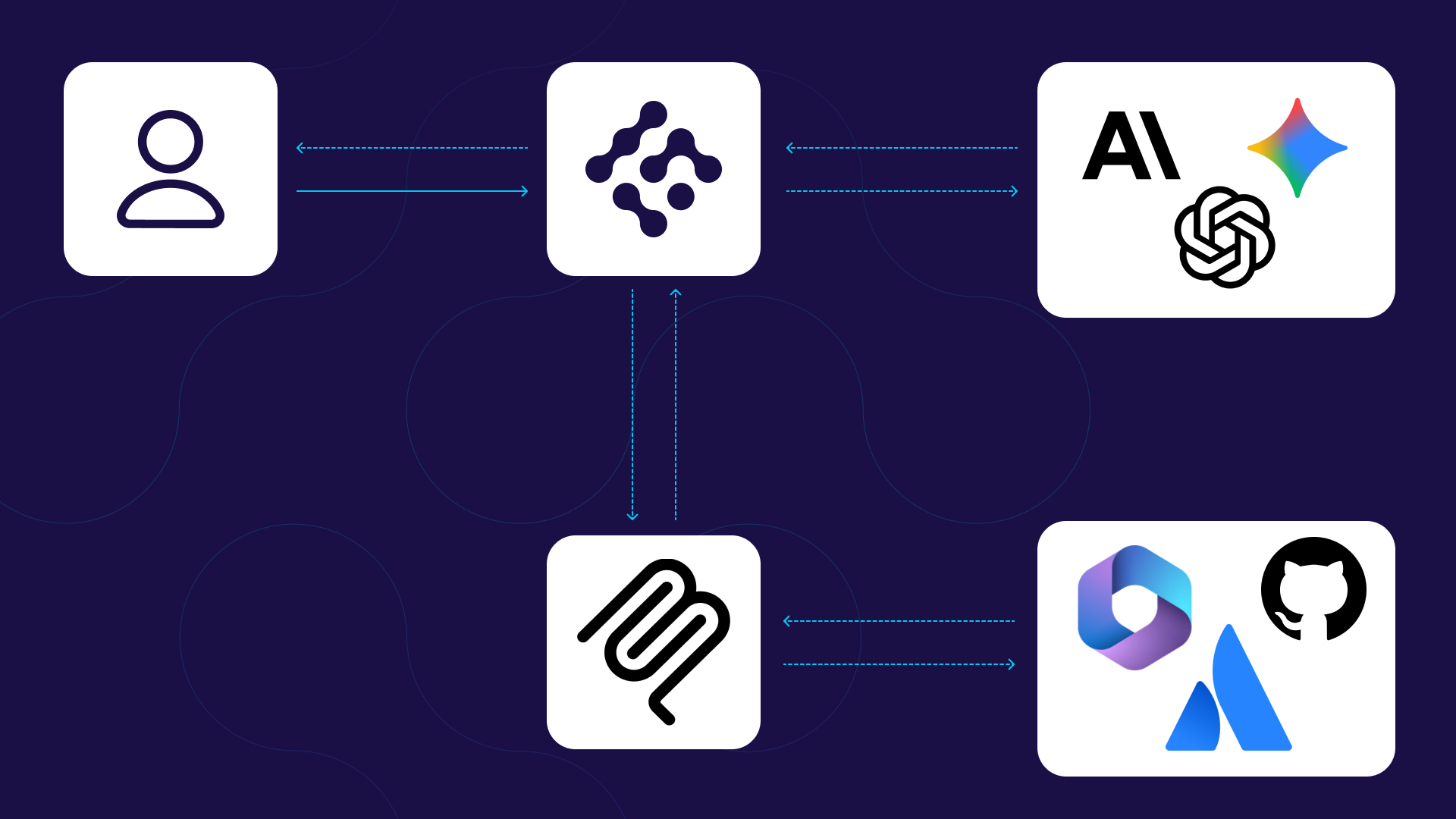

We’re excited to introduce Agent Constraints, a policy engine that fundamentally changes how organizations govern AI agents by shifting enforcement from the application layer to the infrastructure layer. Airia’s Agent Constraints can be applied to agents built on Airia and agents built on other agentic frameworks.

This blog explores the architecture, implementation patterns, and operational considerations that make Agent Constraints a game-changer for enterprise AI governance.

The Problem: Why Traditional Agent Security Falls Short

Guardrails Alone Are Not Sufficient

While AI guardrails have become table stakes for enterprise AI deployments, providing essential prompt filtering and response sanitization, they only address half of the security equation. Guardrails excel at content-level control: preventing prompt injection attacks, blocking toxic outputs, and ensuring responses align with corporate policies.

However, as organizations deploy increasingly autonomous AI agents with the ability to take actions, a critical governance gap emerges.

The Guardrails Gap: Traditional guardrails operate on text: what goes into and comes out of language models. But modern AI agents don’t just generate text; they execute actions through tools, write to databases, send emails, modify configurations, and interact with production systems.

The security challenge becomes even more complex with protocols like the Model Context Protocol (MCP). When a model operates within a user’s context, it inherits the user’s full permissions, persona, tools, and grants. This creates a dangerous attack surface: the result of any tool call can carry malicious instructions that the LLM may honor within this privileged boundary, creating a “Trojan Horse” inside the conversation that operates with the user’s full authority. Guardrails, which only filter text content, cannot detect or prevent these context-based exploits, where the threat comes from the execution layer rather than the conversation layer.

This is where guardrails fall short:

- Action Blindness: Guardrails can filter an agent’s response saying, “I’ll delete the customer database,” but they cannot prevent the agent from executing a DROP TABLE command through a database tool.

- Tool Context Ignorance: While guardrails evaluate content in isolation, they lack awareness of which tools an agent has access to or how those tools might be chained together to cause harm.

- Parameter-Level Control Gaps: Guardrails cannot inspect or modify the specific parameters being passed to tools—they can’t differentiate between SELECT * FROM users and DELETE FROM users.

- Runtime State Blindness: Guardrails evaluate messages statically but cannot consider runtime context like time of day, user permissions, or current system state when an agent attempts to execute an action.

- Approval Fatigue: When users are prompted to approve tool calls over and over, fatigue sets in. Sooner or later they approve a destructive command, because the approval prompt gives them little context about what it will do.

Most organizations today handle agent security through one of three approaches, each with significant limitations:

Embedded Logic Approach: Developers manually code security checks and policy logic directly into each agent’s implementation. This creates maintenance nightmares and inconsistent enforcement, and every policy update requires a full redeployment cycle.

Framework-Level Controls: Some agentic frameworks provide basic security features, but these are typically coarse-grained and framework-specific, creating silos and preventing unified governance across heterogeneous agent ecosystems.

Post-Hoc Monitoring: Many teams resort to logging and alerting after actions occur, which is fundamentally reactive and cannot prevent harmful operations from executing.

Agent Constraints solves these problems by introducing an approach that operates at the runtime layer, intercepting and evaluating all agent-to-tool interactions before execution.

Architecture Overview

Agent Constraints operates as a core component of Airia’s runtime security services, positioned between agents and their target resources (tools, models, and data sources). This architectural positioning enables runtime interception.

Every agent request flows through the Constraints engine, where Airia intercepts tool invocation requests in real time. This happens transparently to the agent, requiring no SDK integration or code modifications.

Components

Agent Constraints consists of three primary components:

Context Aggregator: Collects and enriches request context including agent identity, user context, tool metadata, parameters, and environmental factors like time of day or data classification levels.

Policy Evaluation Engine: Processes policies using a deterministic evaluation engine that supports complex conditional logic, parameter validation, and context-aware decision making.

Policy Enforcement Engine: Executes policy decisions, which can include allowing requests, blocking requests with detailed error messages, restricting tool calls to specified parameters, or triggering additional workflows such as approval processes.
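
To make the division of labor concrete, here is a minimal sketch of how the three components could fit together. It is an illustration only, not Airia’s implementation: every class, function, and field name below (`RequestContext`, `aggregate_context`, `evaluate`, `enforce`, `hour_utc`) is a hypothetical stand-in, and the "first applicable policy wins" rule is an assumption.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class RequestContext:
    """Enriched view of one tool invocation (hypothetical field names)."""
    agent_id: str
    user_id: str
    tool_name: str
    parameters: dict
    environment: dict = field(default_factory=dict)


@dataclass(frozen=True)
class Decision:
    action: str  # "allow" or "block" in this sketch
    reason: str = ""


# A policy returns a Decision, or None when it does not apply to the request.
Policy = Callable[[RequestContext], Optional[Decision]]


def aggregate_context(agent_id, user_id, tool_name, parameters, hour_utc):
    """Context Aggregator: attach environmental factors to the raw request."""
    return RequestContext(agent_id, user_id, tool_name, dict(parameters),
                          {"hour_utc": hour_utc})


def evaluate(policies, ctx):
    """Policy Evaluation Engine: deterministic, first applicable policy wins."""
    for policy in policies:
        decision = policy(ctx)
        if decision is not None:
            return decision
    return Decision("allow", "no policy matched")


def enforce(decision, ctx, tool: Callable[..., Any]):
    """Policy Enforcement Engine: run the tool only if the decision allows it."""
    if decision.action == "block":
        raise PermissionError(f"{ctx.tool_name} blocked: {decision.reason}")
    return tool(**ctx.parameters)


def no_deletes_after_hours(ctx):
    """Example policy: deletes are only allowed from 09:00 to 17:00 UTC."""
    if ctx.tool_name == "delete_record" and not 9 <= ctx.environment["hour_utc"] < 17:
        return Decision("block", "deletes are limited to business hours")
    return None
```

The agent never calls the tool directly in this sketch; it only ever reaches it through `enforce`, which is what lets the check happen before execution rather than after.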

Policy Language and Examples

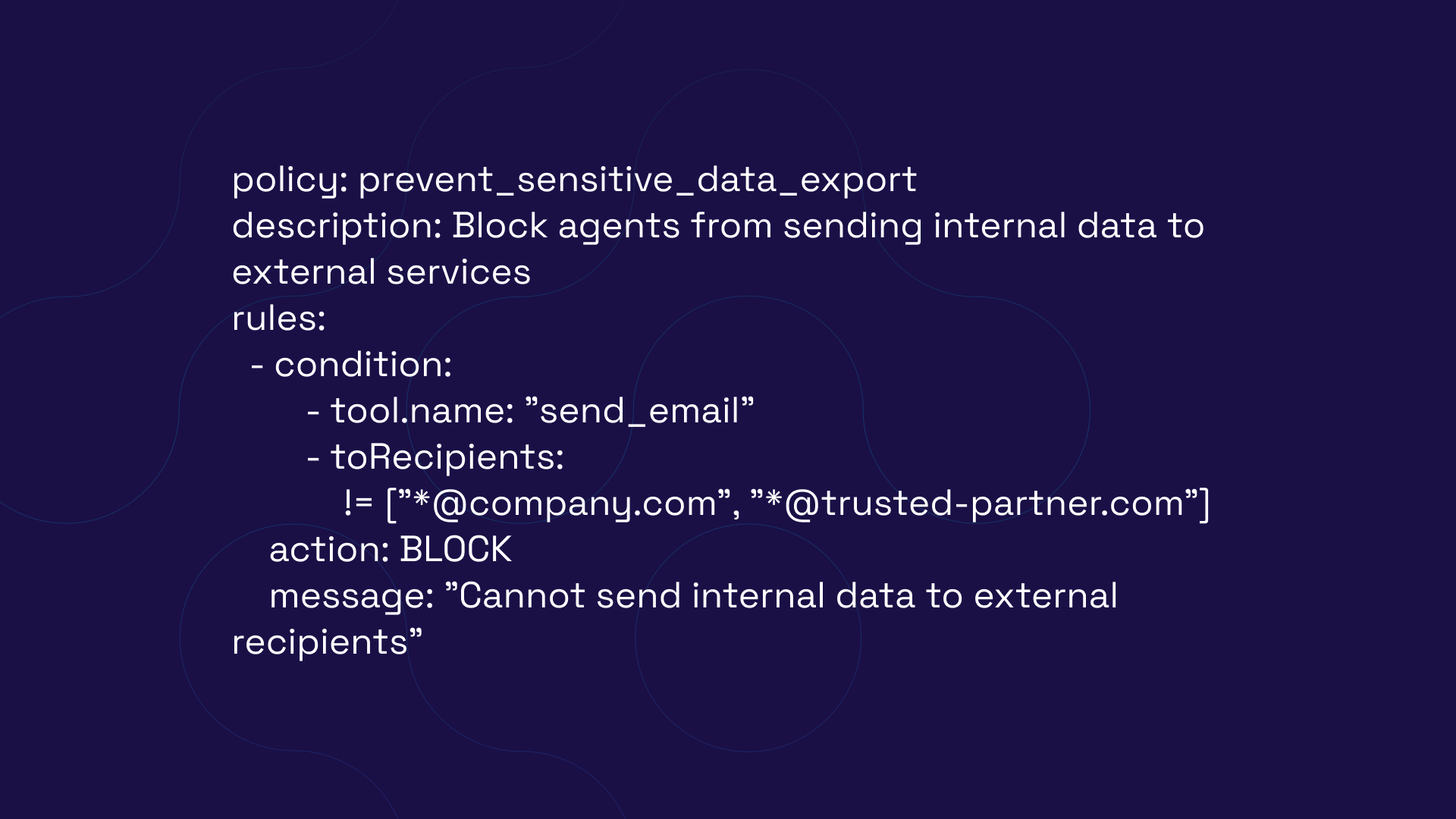

Agent Constraints uses an intuitive IF-THEN policy language that balances expressiveness with maintainability. Policies can evaluate any aspect of the request context and enforce sophisticated controls.
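
The actual policy syntax is shown in the product screenshots, so the following is only a rough model of what an IF-THEN rule amounts to: a list of conditions over the request context plus an outcome. The rule structure, operator names, dotted field paths, and the `payments.refund` tool are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Any, Optional

# Comparison operators available on the IF side (an illustrative set).
OPERATORS = {
    "equals": lambda actual, expected: actual == expected,
    "not_in": lambda actual, expected: actual not in expected,
    "greater_than": lambda actual, expected: actual > expected,
}


@dataclass(frozen=True)
class Condition:
    path: str      # dotted path into the request context, e.g. "params.amount"
    operator: str  # key into OPERATORS
    value: Any


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: tuple  # IF: every Condition must hold
    then: str          # THEN: "allow", "block", or "require_approval"


def lookup(context: dict, path: str) -> Any:
    """Resolve a dotted path against nested dicts (raises KeyError if absent)."""
    value: Any = context
    for key in path.split("."):
        value = value[key]
    return value


def apply_rule(rule: Rule, context: dict) -> Optional[str]:
    """Return the rule's outcome if all conditions match, otherwise None."""
    for cond in rule.conditions:
        if not OPERATORS[cond.operator](lookup(context, cond.path), cond.value):
            return None
    return rule.then


# IF tool is payments.refund AND amount > 500 THEN require approval.
large_refund = Rule(
    name="large-refunds-need-approval",
    conditions=(
        Condition("tool.name", "equals", "payments.refund"),
        Condition("params.amount", "greater_than", 500),
    ),
    then="require_approval",
)
```

Keeping rules as plain data like this is one way to get the maintainability the policy language aims for: a rule can be reviewed, versioned, and tested without touching agent code.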

Example 1: Preventing Data Exfiltration

A malicious user sends a prompt injection hidden in a calendar invite. The injection goes undetected.

The following day:

- The user asks their agent to summarize their day.

- The malicious prompt is passed to the LLM.

- The LLM attempts to exfiltrate corporate secrets via email.

Result:

With a policy constraint in place, the email tool can no longer send messages to external domains, rendering the attack ineffective.

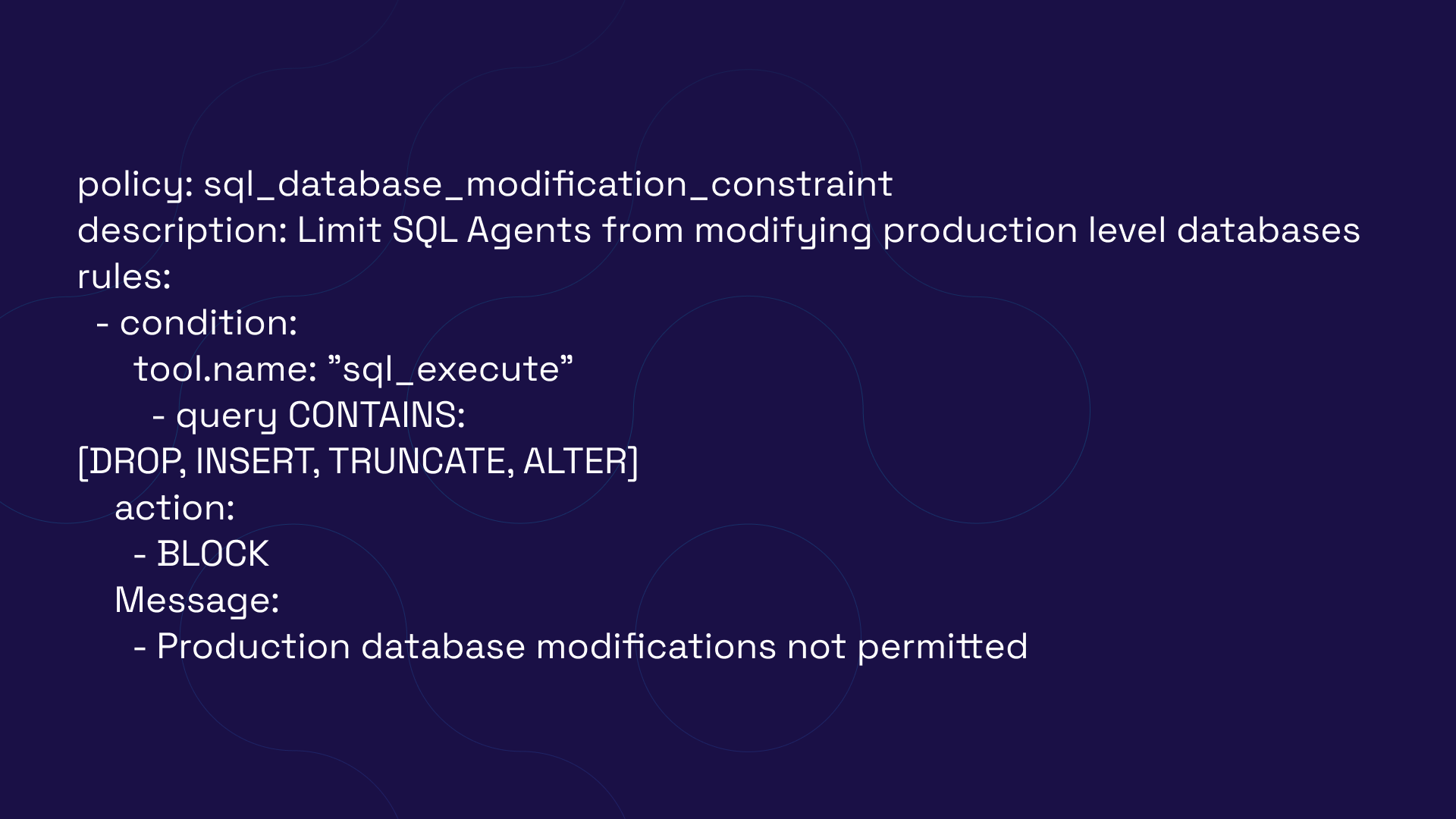
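
In code terms, the check behind such a constraint is small. The sketch below is an assumption about how a recipient check could look, not the product’s logic: the `to`, `cc`, and `bcc` parameter names, the allowed domain `example.com`, and the decision dictionary are hypothetical, and addresses are assumed to be bare (`user@domain`) rather than display-name forms.

```python
ALLOWED_DOMAINS = frozenset({"example.com"})  # hypothetical internal domain


def external_recipients(recipients, allowed_domains=ALLOWED_DOMAINS):
    """Return every address whose domain is not on the allow list."""
    external = []
    for address in recipients:
        # Everything after the last "@"; a malformed address fails closed.
        domain = address.rpartition("@")[2].strip().lower()
        if domain not in allowed_domains:
            external.append(address)
    return external


def check_send_email(params):
    """Decide on an email tool call before it executes."""
    recipients = [*params.get("to", []), *params.get("cc", []), *params.get("bcc", [])]
    blocked = external_recipients(recipients)
    if blocked:
        return {"action": "block",
                "reason": "external recipients: " + ", ".join(blocked)}
    return {"action": "allow", "reason": ""}
```

Because the decision depends only on the tool parameters, it holds no matter what text the injected prompt managed to get in front of the LLM.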

Example 2: Parameter Sanitization

An overzealous AI agent attempts to delete an entire production database the user has access to.

Result:

With a policy restricting database tool operations, an AI agent cannot make unauthorized modifications to production-level databases on its own; a human must oversee such operations.

Unlike traditional RBAC, which assumes good-faith actors, Agent Constraints anticipates AI creativity: agents that may interpret “fix the bug” as “delete the database and start fresh”. Because the policy is enforced at the tool execution layer, even the most sophisticated prompt cannot bypass the constraint.

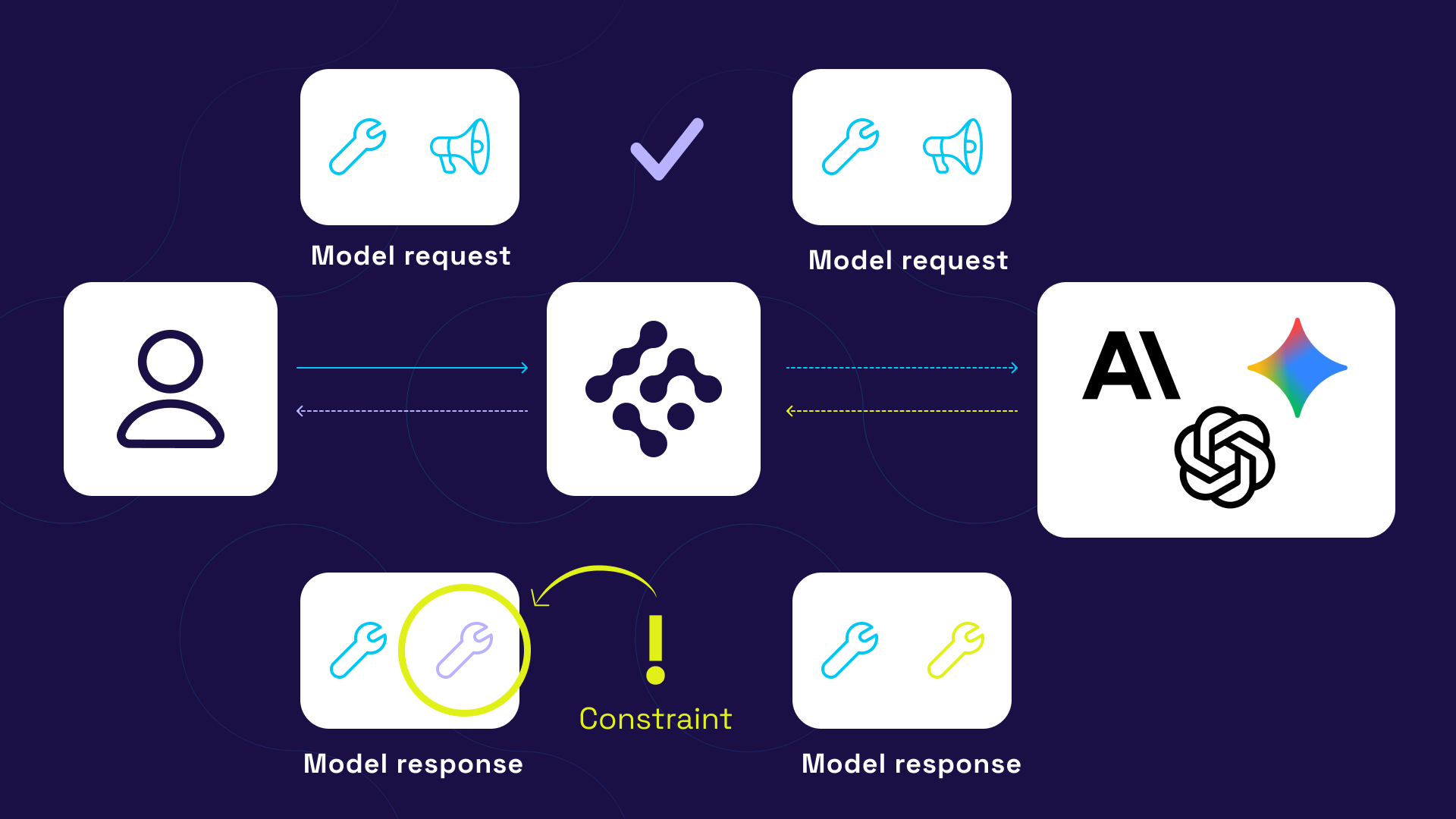

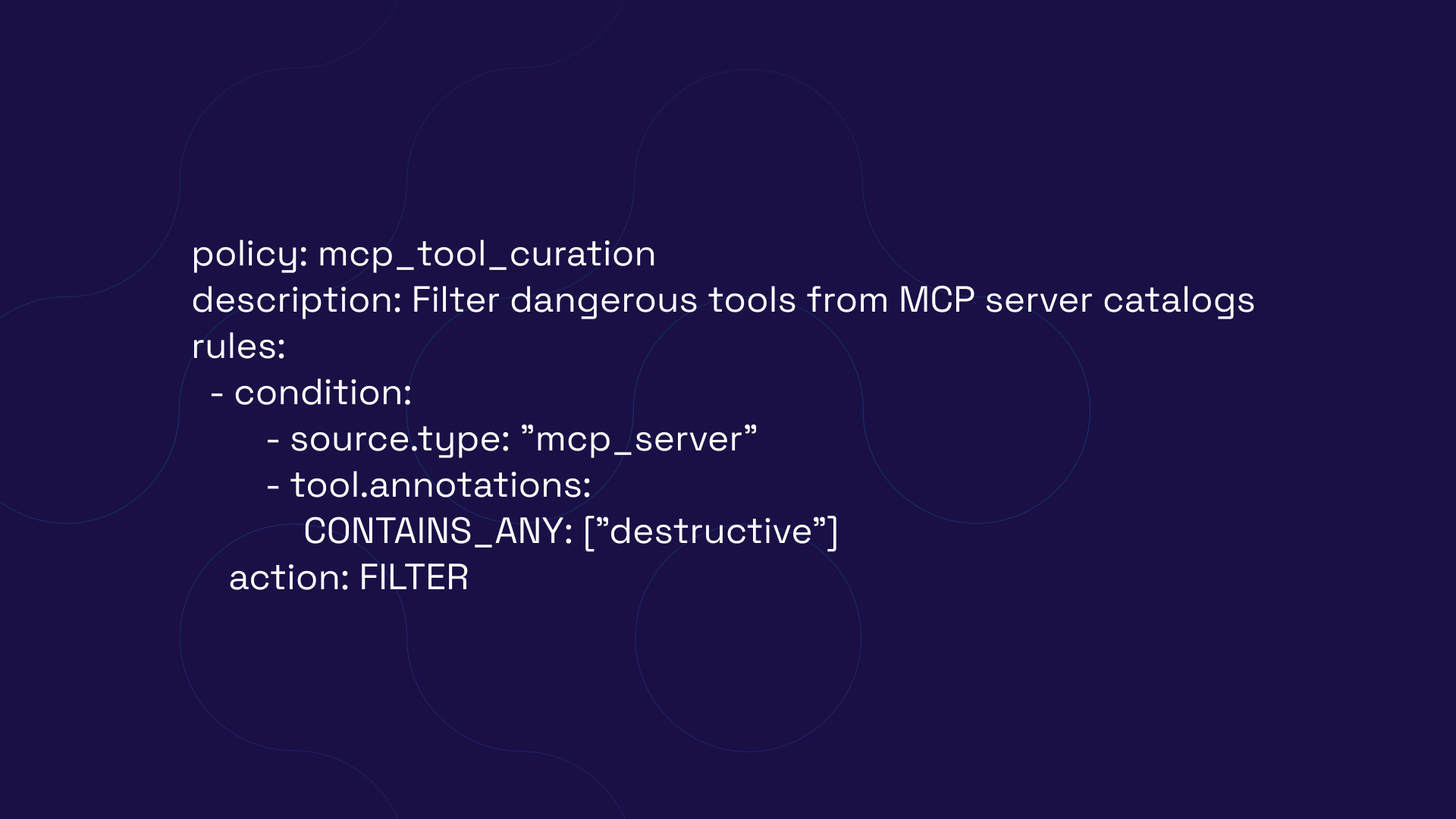
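
A simplified version of this kind of parameter check might look like the following. It is a sketch under stated assumptions: the `environment` label, the `require_approval` outcome, and the allow-list approach (only single `SELECT` statements count as read-only) are ours, and a real deployment would more likely rely on a proper SQL parser than on keyword matching.

```python
def is_read_only(sql: str) -> bool:
    """Treat only a single SELECT statement as read-only; fail closed otherwise."""
    statement = sql.strip().rstrip(";").strip()
    # An empty string or any remaining ";" (possible stacked statements) is rejected.
    if not statement or ";" in statement:
        return False
    return statement.split(None, 1)[0].upper() == "SELECT"


def check_query(environment: str, sql: str) -> str:
    """Return "allow" or "require_approval" for a database tool call."""
    if environment == "production" and not is_read_only(sql):
        return "require_approval"
    return "allow"


for query in ("SELECT * FROM users", "DELETE FROM users", "SELECT 1; DROP TABLE users"):
    print(query, "->", check_query("production", query))
```

Checking against an allow list rather than a deny list is a deliberate choice here: a statement the check does not recognize is routed to a human instead of being waved through.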

Example 3: Tool Filtering

An organization is approving specific MCP servers for use and wants to filter out destructive MCP tools, reducing the risk of actions that irreversibly modify or delete data in its environment.

Result:

When a request passes through the Constraints engine, the violating tools are removed from it and never reach the LLM.
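
Conceptually, this is a filter over the tool list that is applied before the list is handed to the model. The sketch below assumes each tool is a dictionary with a `name` key and that the deny list is a fixed set of names; the tool names themselves are made up.

```python
DENIED_TOOLS = frozenset({"delete_file", "drop_table"})  # hypothetical deny list


def filter_tools(tools, denied=DENIED_TOOLS):
    """Split a tool list into the tools the LLM may see and the names removed."""
    kept = [tool for tool in tools if tool["name"] not in denied]
    removed = [tool["name"] for tool in tools if tool["name"] in denied]
    return kept, removed


advertised = [
    {"name": "list_files", "description": "List files in a directory"},
    {"name": "delete_file", "description": "Permanently delete a file"},
    {"name": "read_file", "description": "Read a file"},
]
visible, removed = filter_tools(advertised)
print([tool["name"] for tool in visible], removed)
```

Since the model never sees a removed tool, it cannot be persuaded to call it, which is a stronger guarantee than asking the model not to use it.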

Implementation Patterns

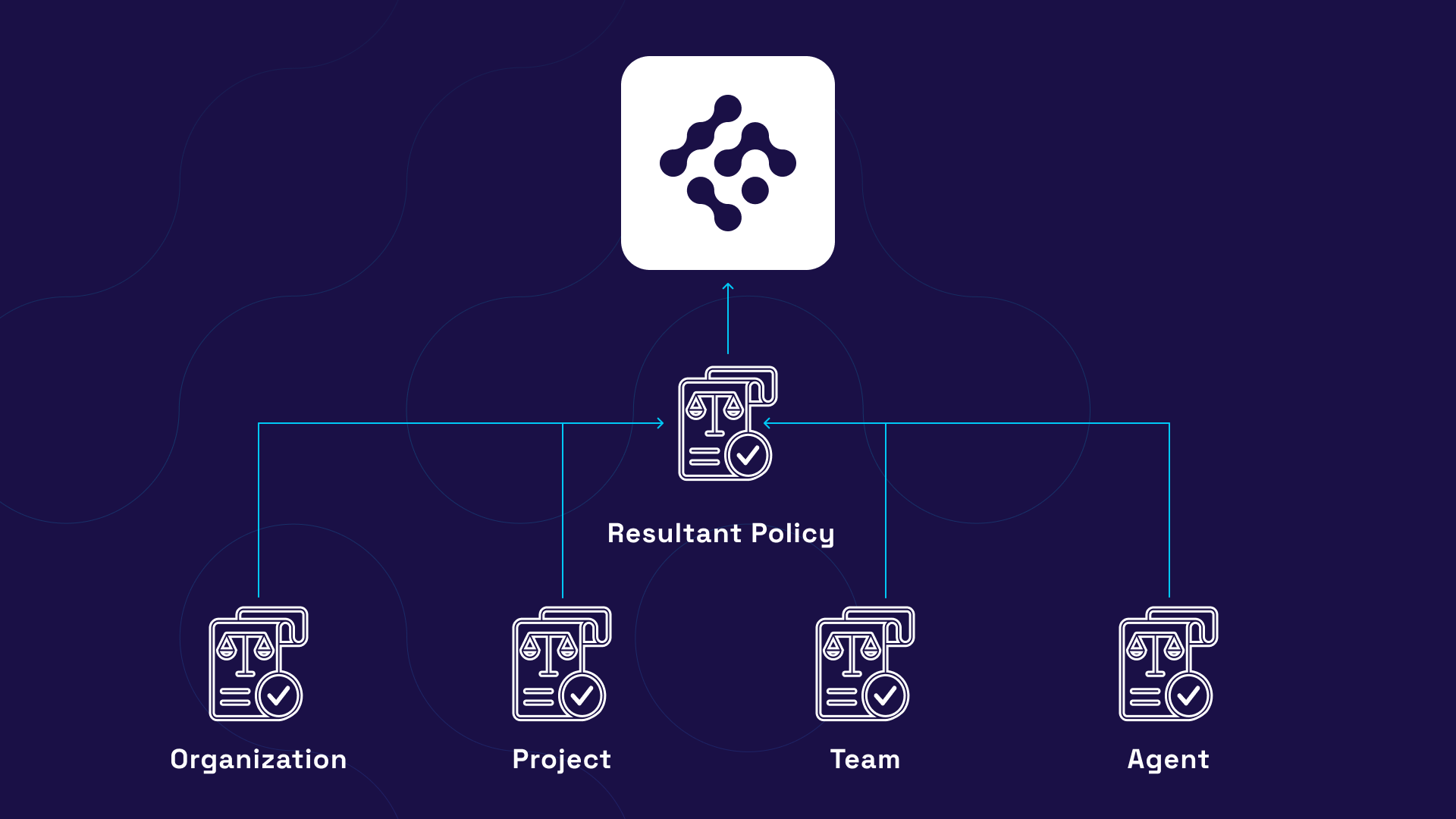

Pattern 1: Layered Policy Architecture

Organizations typically implement policies in layers, with broader organizational policies at the base and increasingly specific policies for teams and use cases:

- Organizational Policies (Base Layer)

- Department Policies

- Team Policies

- Agent-Specific Policies

This hierarchy enables inheritance and override capabilities, allowing teams to maintain autonomy while ensuring baseline compliance.
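
One way to picture inheritance and override is a merge from the broadest layer to the most specific, where a small set of baseline settings is locked against override. The setting keys, the `locked` mechanism, and the merge order below are assumptions chosen to illustrate the idea, not a description of how Airia resolves layers.

```python
def resolve_policy(layers, locked=frozenset()):
    """Merge policy layers, ordered from organization down to agent.

    Later (more specific) layers override earlier ones, except for keys in
    `locked`, where the first value set by a broader layer is kept.
    """
    effective = {}
    for layer in layers:
        for key, value in layer.items():
            if key in locked and key in effective:
                continue  # a narrower layer cannot override a locked baseline
            effective[key] = value
    return effective


organization = {"email.external": "block", "db.write": "require_approval"}
department = {"db.write": "require_approval"}
team = {"db.write": "allow", "email.external": "allow"}  # second key is ignored
agent = {"tools.max_calls": 20}

effective = resolve_policy(
    [organization, department, team, agent],
    locked=frozenset({"email.external"}),
)
print(effective)
```

In this model the team keeps its autonomy over `db.write`, while the organization’s stance on external email remains the baseline for everyone.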

Pattern 2: Progressive Enforcement

Rather than implementing all constraints at once, successful deployments follow a progressive enforcement model:

- Phase 1 – Monitor Mode: Log all policy evaluations without enforcement, establishing baseline behavior patterns.

- Phase 2 – Soft Enforcement: Enable blocking for critical policies while continuing to monitor others.

- Phase 3 – Full Enforcement: Activate all policies with automated remediation and response workflows.
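
The three phases can be thought of as a mode switch that decides whether a policy’s verdict is applied or merely recorded. The following sketch assumes a per-policy `critical` flag and an in-memory audit log; both are hypothetical simplifications.

```python
from enum import Enum


class Mode(Enum):
    MONITOR = "monitor"  # Phase 1: log only
    SOFT = "soft"        # Phase 2: enforce critical policies only
    FULL = "full"        # Phase 3: enforce everything


def effective_action(mode, policy_action, critical, audit_log):
    """Translate a policy verdict into what actually happens in this mode."""
    enforced = policy_action == "block" and (
        mode is Mode.FULL or (mode is Mode.SOFT and critical)
    )
    # Every evaluation is logged, whether or not it is enforced.
    audit_log.append({"mode": mode.value, "verdict": policy_action,
                      "critical": critical, "enforced": enforced})
    return "block" if enforced else "allow"


log = []
for mode in Mode:
    print(mode.value, effective_action(mode, "block", critical=False, audit_log=log))
```

Logging the verdict in every mode is what makes Phase 1 useful: the same records show what would have been blocked before any blocking is switched on.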

Performance Considerations

Latency Optimization

Agent Constraints adds minimal latency to agent operations through several optimizations:

- Policy Compilation: Policies are compiled into optimized decision trees at deployment time.

- Caching: Frequent policy evaluations are cached with intelligent invalidation.

- Parallel Evaluation: Independent policies evaluate concurrently.

- Early Termination: Evaluation stops immediately upon reaching a definitive decision.

Typical latency impact: under 10 ms for simple policies and under 50 ms for complex multi-condition policies.
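
Two of these optimizations, caching and early termination, are easy to show in miniature. The sketch below uses Python’s standard `functools.lru_cache` as a stand-in for the cache and clears it whenever policies are reloaded; the policy functions, the `calls` list (there only to make the evaluation order visible), and the invalidation strategy are illustrative assumptions.

```python
from functools import lru_cache

calls = []  # records which policies actually ran


def deny_drop(tool, operation):
    calls.append("deny_drop")
    return "block" if operation == "drop_table" else None


def deny_external_email(tool, operation):
    calls.append("deny_external_email")
    return "block" if tool == "email" and operation == "send_external" else None


POLICIES = (deny_drop, deny_external_email)


@lru_cache(maxsize=1024)
def decide(tool, operation):
    """Evaluate policies in order; repeated identical requests hit the cache."""
    for policy in POLICIES:
        if policy(tool, operation) == "block":
            return "block"  # early termination: later policies are skipped
    return "allow"


def reload_policies():
    """Invalidate cached decisions, for example after a policy change."""
    decide.cache_clear()
```

Calling `decide("database", "drop_table")` twice runs `deny_drop` once and never reaches `deny_external_email`; the second call is answered from the cache. Caching like this is only safe when the decision depends solely on the cached inputs, so context such as time of day would need to be part of the key.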

Scalability Architecture

The system scales horizontally to handle enterprise workloads:

- Stateless Evaluation: Policy engines maintain no session state, enabling unlimited horizontal scaling.

- Distributed Caching: Redis-backed caching layer for cross-instance performance optimization.

- Load Balancing: Automatic distribution of evaluation workload across available instances.

- Auto-scaling: Kubernetes-based auto-scaling based on request volume and complexity.

Best Practices

Based on early adopter experiences, we recommend:

- Start with Observability: Understand current agent behavior before implementing constraints.

- Use Templates: Leverage pre-built templates and customize rather than building from scratch.

- Implement Gradually: Roll out policies incrementally with proper testing at each stage.

- Maintain Policy Documentation: Document intent, not just implementation, for each policy.

- Regular Policy Reviews: Schedule quarterly reviews to ensure policies remain aligned with business needs.

- Test in Production-Like Environments: Use realistic data and scenarios in staging environments.

- Monitor Performance Impact: Track latency and throughput metrics as policies are added.

Conclusion

Agent Constraints represents a paradigm shift in how enterprises govern AI agents. By moving policy enforcement to the infrastructure layer, organizations can finally achieve the seemingly contradictory goals of rapid innovation and robust governance. The result is a more secure, compliant, and manageable AI agent ecosystem that scales with your business needs.

As AI agents become increasingly autonomous and powerful, the need for sophisticated governance mechanisms becomes critical. Agent Constraints provides the foundation for this governance, enabling organizations to confidently deploy agents that can write to production systems, interact with customers, and make business decisions—all while maintaining precise control over their behavior.

Ready to transform your AI agent governance? Schedule a meeting with one of our experts today.

For technical questions or to share your Agent Constraints use cases, join our Discord or visit our user community.